
PREVIEW - This Use Case showcases features that may not yet be available to all customers. The functionality, scope, and design of preview features may change significantly in the future. While we finalize the remaining technical details, requirements and steps may change.

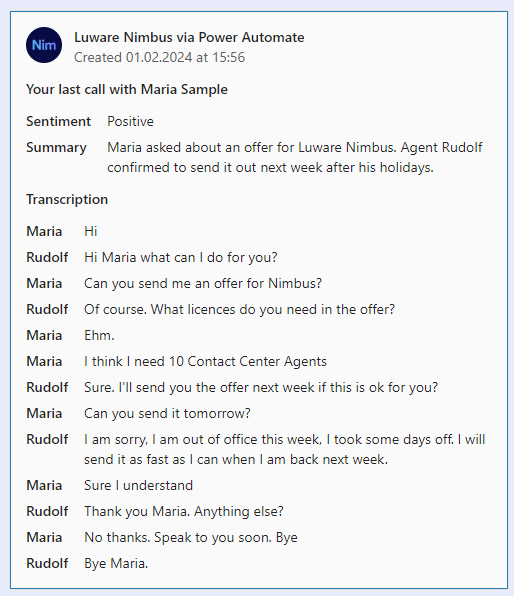

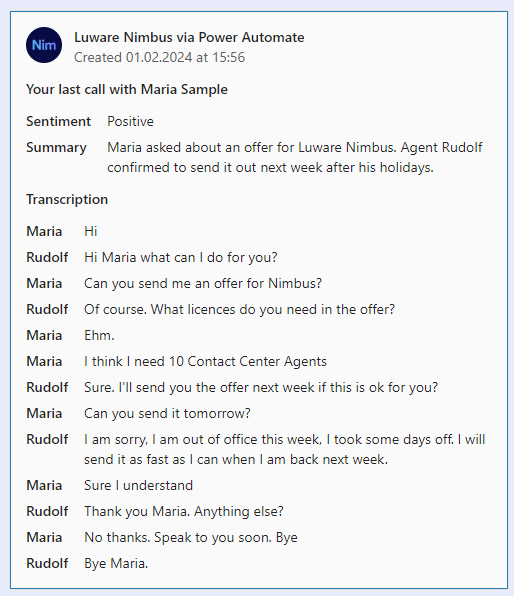

After a voice call, agents might want insights into the transcribed call, and supervisors might want the transcription stored in a CRM. In this Use Case, we send an Adaptive Card to the agent when the transcript is ready. The card shows the sentiment of the conversation, a summary of the text, and the complete transcript.

Preconditions

- Before creating this flow, make sure the Transcription feature is set up for your Service. See Use Case - Setting Up Transcription for instructions.


| Icon | Meaning |
|---|---|
| 💡 | A hint to signal learnings, improvements, or useful information in context. |
| 🔍 | Info points out essential notes or related pages in context. |
| ☝ | Notifies you about fallacies and tricky parts that help avoid problems. |
| 🤔 | Asks and answers common questions and troubleshooting points. |
| ❌ | Warns you of actions with irreversible / data-destructive consequences. |
| ✅ | Instructs you to perform a certain (prerequisite) action to complete a related step. |

Participant Types

In Power Automate, there are three participant types used in transcriptions: Customer, User, and Other. The following table gives you more information on them:

| Customer | User | Other |
|---|---|---|

Overview of the Flow


Build the Flow


Start with the trigger “When the companion has an update” and select the Service. Set the event to “Voice Transcription ready”.

Use the Service session ID to get the corresponding transcription data.

Next, initialize the two variables the flow needs:

- Variable “CleanText”, Type: String
- Variable “TranscriptionFacts”, Type: Array

💡 CleanText holds the plain conversation text that is analyzed with AI.

💡 TranscriptionFacts represents a node in the JSON of the Adaptive Card.

From the Participants data, extract the agent portion: use Filter Array on the transcription data, where items()?['type'] is equal to User.

From the Participants data, extract the customer portion: use Filter Array on the transcription data, where items()?['type'] is equal to Customer.
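Both Filter Array steps apply the same condition with a different value. The Python sketch below is only an analogue for reasoning about the data, not something the flow runs: p.get("type") plays the role of items()?['type'], where the ?[] operator yields null instead of failing when the key is missing. The participant records and their keys (type, identifier, id) are hypothetical stand-ins for the real transcription payload.

```python
from typing import Any


def filter_by_type(participants: list[dict[str, Any]], wanted: str) -> list[dict[str, Any]]:
    """Keep only participants whose 'type' equals `wanted`.

    p.get("type") behaves like items()?['type']: a participant without a
    'type' key yields None and is filtered out instead of raising an error.
    """
    return [p for p in participants if p.get("type") == wanted]


# Hypothetical sample data; the real shape comes from the transcription data.
participants = [
    {"type": "User", "identifier": {"id": "agent-office-id"}},
    {"type": "Customer", "identifier": {"id": "customer-id"}},
    {"type": "Other"},
    {"identifier": {"id": "no-type"}},  # a missing 'type' is tolerated
]

agents = filter_by_type(participants, "User")        # first Filter Array
customers = filter_by_type(participants, "Customer")  # second Filter Array
print(len(agents), len(customers))  # prints "1 1"
```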

The Identifier/Id field of the agent participant holds the agent's OfficeID. Use it to get the agent's details from O365.

You need the agent's UPN (User Principal Name) to send the Adaptive Card at the end of this flow.
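The sketch below shows the lookup in Python terms, assuming the filtered agent participant carries its OfficeID under identifier.id and that this ID is the agent's Microsoft Entra object ID. The flow itself uses the O365 step; the Microsoft Graph URL appears only as an equivalent way to see which field you need, since GET /v1.0/users/{id} returns the userPrincipalName property. The helper names and sample values are hypothetical.

```python
from typing import Any
from urllib.parse import quote


def agent_office_id(agents: list[dict[str, Any]]) -> str:
    """Return identifier.id of the first agent, roughly first(...)?['identifier']?['id']."""
    if not agents:
        raise ValueError("No participant of type 'User' in the transcription")
    office_id = (agents[0].get("identifier") or {}).get("id")
    if not office_id:
        raise ValueError("Agent participant has no identifier id")
    return office_id


def graph_user_url(office_id: str) -> str:
    """Microsoft Graph URL that returns only the user's UPN."""
    return (
        "https://graph.microsoft.com/v1.0/users/"
        + quote(office_id, safe="")
        + "?$select=userPrincipalName"
    )


def upn_from_graph_response(body: dict[str, Any]) -> str:
    """Extract the UPN from a Graph /users/{id} response body."""
    return body["userPrincipalName"]


agents = [{"type": "User", "identifier": {"id": "00000000-0000-0000-0000-000000000001"}}]
office_id = agent_office_id(agents)
print(graph_user_url(office_id))
# Hypothetical response body for the URL above.
print(upn_from_graph_response({"userPrincipalName": "agent@contoso.com"}))
```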

Iterate through the Phrases…

…and, within that loop, also iterate through the Participants.

Match the phrase's ParticipantId with the Identifier/Id of the participant.
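The two loops and the condition amount to a nested loop that joins phrases to participants on the participant ID. This Python sketch mirrors that structure under the same hypothetical payload shape (phrases with a participantId, participants with identifier.id); the function name is illustrative.

```python
from collections.abc import Iterator
from typing import Any


def match_phrases(
    phrases: list[dict[str, Any]], participants: list[dict[str, Any]]
) -> Iterator[tuple[dict[str, Any], dict[str, Any]]]:
    """Yield (phrase, participant) pairs in phrase order."""
    for phrase in phrases:  # outer loop over the Phrases
        for participant in participants:  # inner loop over the Participants
            identifier_id = (participant.get("identifier") or {}).get("id")
            # The condition: phrase participantId equals the participant's Identifier/Id.
            if identifier_id is not None and phrase.get("participantId") == identifier_id:
                yield phrase, participant


participants = [
    {"type": "User", "identifier": {"id": "agent-1"}},
    {"type": "Customer", "identifier": {"id": "customer-1"}},
]
phrases = [
    {"participantId": "agent-1", "text": "Hello, how can I help?"},
    {"participantId": "customer-1", "text": "My order is late."},
    {"participantId": "unknown", "text": "(no matching participant)"},
]
for phrase, participant in match_phrases(phrases, participants):
    print(participant["type"], "->", phrase["text"])
```

Calls usually have only a few participants, so the inner loop stays cheap. Phrases without a matching participant are skipped, just like a false branch that does nothing.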

If the condition is true, fill the variables CleanText and TranscriptionFacts:

Create the CleanText string.

Create the Adaptive Card node.
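Inside the true branch, each matched phrase extends CleanText and adds one entry to TranscriptionFacts. The exact text format and card node are not specified here, so the sketch below assumes one line per phrase prefixed with a speaker label, and a fact object with title and value, which is the shape of a FactSet fact in the Adaptive Card schema. The labels and the helper are hypothetical; in the flow, the Append to string variable and Append to array variable actions would play these roles.

```python
from typing import Any

# Hypothetical display labels; the User participant is the agent in this flow.
SPEAKER_LABELS = {"User": "Agent", "Customer": "Customer"}


def speaker_label(participant: dict[str, Any]) -> str:
    ptype = participant.get("type", "Other")
    return SPEAKER_LABELS.get(ptype, ptype)


def append_phrase(
    clean_text: str,
    transcription_facts: list[dict[str, str]],
    phrase: dict[str, Any],
    participant: dict[str, Any],
) -> str:
    """Mirror the two actions in the true branch.

    Returns the new CleanText value (Python strings are immutable, so the
    caller re-assigns it) and appends one Adaptive Card fact in place.
    """
    label = speaker_label(participant)
    text = phrase.get("text", "")
    clean_text += f"{label}: {text}\n"
    # A FactSet fact in the Adaptive Card schema has 'title' and 'value'.
    transcription_facts.append({"title": label, "value": text})
    return clean_text


clean_text = ""
transcription_facts: list[dict[str, str]] = []
clean_text = append_phrase(
    clean_text, transcription_facts,
    {"participantId": "agent-1", "text": "Hello, how can I help?"}, {"type": "User"},
)
clean_text = append_phrase(
    clean_text, transcription_facts,
    {"participantId": "customer-1", "text": "My order is late."}, {"type": "Customer"},
)
print(clean_text, end="")
```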

Now you can use CleanText for the AI text analysis. The text analysis can be done in one of two ways, Variant 1 or Variant 2, both described below.

💡 In the following, we continue with the data returned from Variant 1.

Variant 1: Using the built-in AI Builder premium connector. Our example uses this variant. Here is an overview of what you need:

For the first element, select the “AI Summarize” prompt and add the CleanText variable as input text. We also recommend adding textual context to the prompt. You can use this generic phrase and refine it for your case:

“This is a transcribed conversation between a customer and a contact center agent.”

The second element looks as follows. You could use the language information from the transcription data, because each phrase has a language property attached to it. To simplify the flow, we just set the language to English.
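If you prefer not to hard-code English, one option is to pick the language that occurs most often across the phrases. This Python sketch assumes each phrase has a language key holding a code such as en-US; you may still need to map that value to the format the AI Builder action expects. The function name and the en default are assumptions.

```python
from collections import Counter
from typing import Any


def dominant_language(phrases: list[dict[str, Any]], default: str = "en") -> str:
    """Return the most frequent 'language' value across phrases, or `default`."""
    counts = Counter(p["language"] for p in phrases if p.get("language"))
    if not counts:
        return default
    # most_common(1) returns [(value, count)]; ties go to the value seen first.
    return counts.most_common(1)[0][0]


phrases = [
    {"language": "en-US"},
    {"language": "de-DE"},
    {"language": "en-US"},
    {"text": "phrase without a language"},
]
print(dominant_language(phrases))
```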

Variant 2: Using a deployed model on Azure AI. With this variant, you only need to make one request to your model using the HTTP element, then parse the response and adapt the next step accordingly. It consists of these two elements:

- An HTTP request. Its details depend on your model; we are using GPT-4o deployed in Azure.
- A Parse JSON element on the message content returned by the HTTP request.
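For Variant 2, the sketch below shows a request body and the parsing step in Python, assuming an Azure OpenAI chat completions deployment of GPT-4o. The HTTP element would POST this body to an endpoint of the form https://{resource}.openai.azure.com/openai/deployments/{deployment}/chat/completions?api-version={version} with an api-key header. The summary and sentiment keys are a hypothetical contract with the model, and JSON mode (response_format of type json_object) requires a model and API version that support it.

```python
import json
from typing import Any

# Hypothetical instruction; the keys 'summary' and 'sentiment' are our own choice.
SYSTEM_PROMPT = (
    "This is a transcribed conversation between a customer and a contact center agent. "
    "Respond with a JSON object with the keys 'summary' (string) and "
    "'sentiment' (one of 'positive', 'neutral', 'negative')."
)


def build_request_body(clean_text: str) -> dict[str, Any]:
    """Body for a chat completions request to the GPT-4o deployment."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": clean_text},
        ],
        # JSON mode asks the model to return a single valid JSON object.
        "response_format": {"type": "json_object"},
    }


def parse_analysis(response_body: dict[str, Any]) -> dict[str, Any]:
    """Equivalent of Parse JSON on choices[0].message.content."""
    content = response_body["choices"][0]["message"]["content"]
    analysis = json.loads(content)
    if not isinstance(analysis, dict):
        raise ValueError("Model response is not a JSON object")
    missing = [key for key in ("summary", "sentiment") if key not in analysis]
    if missing:
        raise ValueError(f"Model response is missing keys: {missing}")
    return analysis


sample_response = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": '{"summary": "Customer reported a late order.", "sentiment": "neutral"}',
            }
        }
    ]
}
analysis = parse_analysis(sample_response)
print(analysis["sentiment"])
```

Asking for a JSON object keeps the Parse JSON schema simple, because the message content is then a single object with known keys. The key check is there because the model can still return something unexpected.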

Finally, send the Adaptive Card JSON to the agent, using the agent's User Principal Name.
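The exact card JSON is not reproduced here, so the following Python sketch assembles one possible card from the pieces built earlier: the summary and sentiment from the text analysis, and TranscriptionFacts as the facts of a FactSet. The layout, the texts, and version 1.4 are assumptions; use a version your card host supports.

```python
import json
from typing import Any


def build_card(
    summary: str, sentiment: str, transcription_facts: list[dict[str, str]]
) -> dict[str, Any]:
    """Assemble an Adaptive Card with sentiment, summary and the full transcript."""
    return {
        "type": "AdaptiveCard",
        "$schema": "http://adaptivecards.io/schemas/adaptive-card.json",
        "version": "1.4",  # assumption: pick a version your card host supports
        "body": [
            {"type": "TextBlock", "text": "Call insights", "weight": "Bolder", "size": "Medium"},
            {"type": "TextBlock", "text": f"Sentiment: {sentiment}", "wrap": True},
            {"type": "TextBlock", "text": "Summary", "weight": "Bolder"},
            {"type": "TextBlock", "text": summary, "wrap": True},
            {"type": "TextBlock", "text": "Transcript", "weight": "Bolder"},
            # TranscriptionFacts is used unchanged as the FactSet's facts.
            {"type": "FactSet", "facts": transcription_facts},
        ],
    }


facts = [
    {"title": "Agent", "value": "Hello, how can I help?"},
    {"title": "Customer", "value": "My order is late."},
]
card = build_card("Customer reported a late order.", "neutral", facts)
print(json.dumps(card, indent=2))
```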

This is what the final card should look like.